AI, Deep Learning, Generative Models, and More...

And by 'more' I mean LLMs and Security!

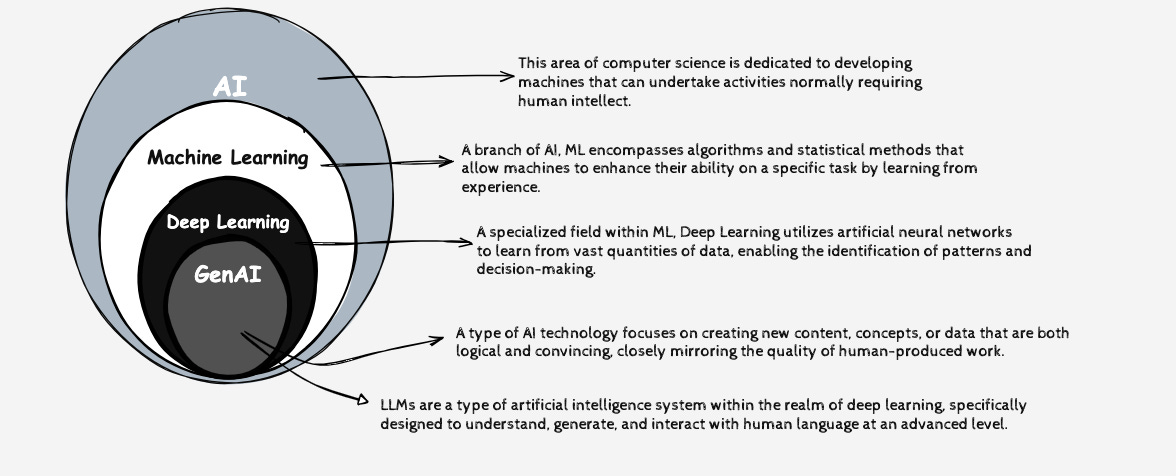

The term 'AI' frequently serves as a catch-all in media and marketing, which can create confusion. This post aims to demystify key concepts such as artificial intelligence, machine learning, deep learning, and generative models. To aid in this explanation, I've started using SketchWow to create visuals. As the saying goes, a picture is worth a thousand words.

Now that we’ve clarified some terminology, let's focus on the main topic of today’s post: Large Language Models (LLMs) and security. As discussed in this article, language models are statistical models designed to predict the next word (more precisely, the next token) in a text sequence based on the preceding words. When trained on vast datasets, these models become what we know as LLMs, such as Gemini, GPT-4, and Claude. Using deep learning techniques, LLMs can discern complex patterns in data, enabling them to produce coherent and contextually appropriate text.
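To make the "predict the next word" idea concrete, here is a toy sketch in Python. It is a bigram model: it simply counts which word follows which in a tiny, made-up corpus and picks the most frequent follower. Real LLMs instead use deep neural networks over sub-word tokens and much longer contexts, so treat this only as an illustration of the statistical objective, not of how Gemini, GPT-4, or Claude are built. The function names are my own.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram_model(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts: Dict[str, Counter] = defaultdict(Counter)
    for prev_word, next_word in zip(words, words[1:]):
        follow_counts[prev_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Estimate P(next word | current word) from the raw counts."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: count / total for w, count in followers.items()} if total else {}


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most likely next word, or None if `word` was never seen."""
    probs = next_word_probabilities(model, word)
    return max(probs, key=probs.get) if probs else None


corpus = "the model predicts the next word . the model learns patterns from data ."
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))  # {'model': 0.6666666666666666, 'next': 0.3333333333333333}
print(predict_next(model, "the"))             # model
```

An LLM does conceptually the same thing, assigning a probability to every possible next token, but it conditions on thousands of preceding tokens rather than just one word.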

But figuring out why deep learning works so well isn’t just an intriguing scientific puzzle. It could also be key to unlocking the next generation of the technology—as well as getting a handle on its formidable risks.

Security Across the Lifecycle of Large Language Models (LLMs)

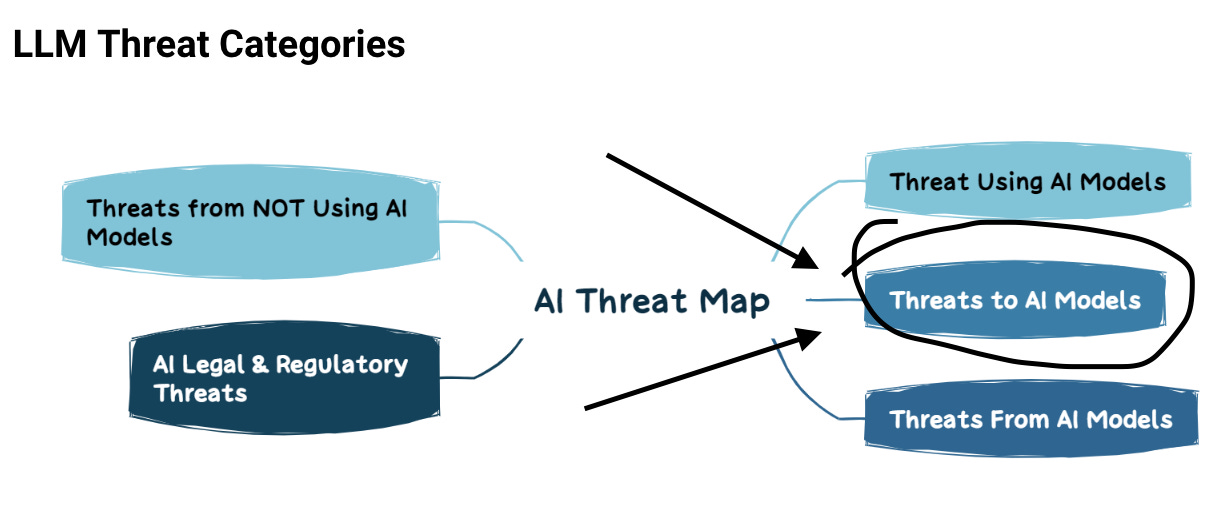

AI Security, which covers much of what is discussed today, involves using artificial intelligence to enhance security measures. For example, AI can detect cyber threats, monitor network activity for suspicious behavior, and identify software vulnerabilities.

Securing AI, the focus of this post, is about protecting AI systems themselves from being compromised. It ensures that AI models, data, and infrastructure are safe from threats such as adversarial attacks (manipulating inputs to deceive AI), data poisoning (corrupting training data), and unauthorized access.
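To see what "manipulating inputs to deceive AI" can look like, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a classic adversarial attack, applied to a toy logistic-regression classifier. The weights, input, and epsilon are made-up values chosen for illustration. Attacks on LLMs usually target text prompts rather than numeric features, but the idea of a small, deliberately crafted change flipping the model's output is similar in spirit.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: List[float], b: float, x: List[float], y_true: int, epsilon: float) -> List[float]:
    """FGSM: shift each feature by epsilon in the direction that increases
    the cross-entropy loss for the true label y_true (0 or 1)."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y_true) * w_i
    grad = [(p - y_true) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


# Toy, made-up model and input
w, b = [2.0, -1.0, 0.5], -0.5
x = [0.6, 0.2, 0.4]
x_adv = fgsm(w, b, x, y_true=1, epsilon=0.3)

print(round(predict_proba(w, b, x), 3))      # 0.668 -> classified as 1
print(round(predict_proba(w, b, x_adv), 3))  # 0.413 -> now classified as 0
```

No feature moves by more than 0.3, yet the prediction flips, which is exactly why robustness testing belongs in the security process.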

When it comes to securing LLMs, it’s essential to consider every layer, from pre-training and fine-tuning to runtime operations. Incorporating operational frameworks like LLMOps and MLOps is crucial for maintaining security throughout the entire process. Let's unpack these ideas in a more digestible way:

Layered Security for LLMs

Pre-Training: Protecting the initial training data and processes to prevent manipulation or corruption.

Fine-Tuning: Ensuring secure updates and modifications to the model.

Runtime Operations: Safeguarding the deployed model against real-time threats and unauthorized access.
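Here is a rough sketch of what one control at each layer might look like, written in Python with only the standard library. The specific controls (a SHA-256 manifest for training-data shards, an HMAC signature on fine-tuned weights, and an API-key plus prompt-length gate at runtime) are my illustrative assumptions, not a prescribed standard, and all function names and the `MAX_PROMPT_CHARS` limit are hypothetical.

```python
import hashlib
import hmac
from typing import Dict, List, Set


# --- Pre-training: detect tampering with training-data shards ---------------
def build_manifest(shards: Dict[str, bytes]) -> Dict[str, str]:
    """Record a SHA-256 digest for each shard while the data is known to be clean."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in shards.items()}


def find_tampered_shards(shards: Dict[str, bytes], manifest: Dict[str, str]) -> List[str]:
    """Return shards whose digest no longer matches (or is missing from) the manifest."""
    return [
        name for name, data in shards.items()
        if manifest.get(name) != hashlib.sha256(data).hexdigest()
    ]


# --- Fine-tuning: accept only model updates signed by the training pipeline --
def sign_artifact(artifact: bytes, key: bytes) -> str:
    """HMAC-SHA256 tag over serialized weights (e.g. a fine-tuned adapter)."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, tag: str, key: bytes) -> bool:
    # compare_digest compares in constant time to avoid timing leaks
    return hmac.compare_digest(sign_artifact(artifact, key), tag)


# --- Runtime: gate every request before it reaches the model ----------------
MAX_PROMPT_CHARS = 4000  # hypothetical limit


def admit_request(api_key: str, prompt: str, allowed_keys: Set[str]) -> bool:
    """Reject unauthenticated callers and empty or oversized prompts."""
    return api_key in allowed_keys and 0 < len(prompt) <= MAX_PROMPT_CHARS


# Usage with made-up data
shards = {"shard-000.txt": b"clean text", "shard-001.txt": b"more clean text"}
manifest = build_manifest(shards)
shards["shard-001.txt"] = b"poisoned text"
print(find_tampered_shards(shards, manifest))  # ['shard-001.txt']

key = b"demo-signing-key"  # hypothetical; a real key would come from a secrets store
weights = b"fine-tuned adapter weights"
tag = sign_artifact(weights, key)
print(verify_artifact(weights, tag, key))             # True
print(verify_artifact(b"swapped weights", tag, key))  # False

print(admit_request("key-123", "Summarize this report.", {"key-123"}))  # True
print(admit_request("unknown", "Summarize this report.", {"key-123"}))  # False
```

Each check is deliberately small; the point is that every layer gets its own control, so a failure at one layer doesn't leave the whole model exposed.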

Incorporating Solid Operational Frameworks

LLM(Sec)Ops: It’s all about keeping the model's lifecycle smooth and secure, with a focus on streamlining deployment while making sure security controls stay active at every stage.

ML(Sec)Ops: This emphasizes a seamless, automated workflow for machine learning models, making sure security isn't an afterthought but a core component.
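One way to picture "security as a core component" of an automated workflow is a set of gates that every release must pass before deployment. The sketch below is hypothetical: the check names, the release fields, and the 1% jailbreak threshold are placeholders, and a real LLM(Sec)Ops setup would call actual scanners and evaluation suites in their place.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Check:
    name: str
    run: Callable[[dict], bool]  # returns True if the check passes


@dataclass
class PipelineResult:
    deployed: bool
    failures: List[str] = field(default_factory=list)


def run_pipeline(release: dict, checks: List[Check]) -> PipelineResult:
    """Run every security gate; deploy only if all of them pass."""
    failures = [c.name for c in checks if not c.run(release)]
    return PipelineResult(deployed=not failures, failures=failures)


# Hypothetical gates spanning the lifecycle
checks = [
    Check("training-data-hashes-verified", lambda r: r["data_manifest_ok"]),
    Check("model-artifact-signed", lambda r: r["signature_ok"]),
    Check("red-team-eval-passed", lambda r: r["jailbreak_success_rate"] <= 0.01),
    Check("dependencies-scanned", lambda r: not r["known_vulnerable_deps"]),
]

release = {
    "data_manifest_ok": True,
    "signature_ok": True,
    "jailbreak_success_rate": 0.04,
    "known_vulnerable_deps": [],
}
print(run_pipeline(release, checks))
# PipelineResult(deployed=False, failures=['red-team-eval-passed'])
```

Because the gates run on every release rather than once, a regression in any layer blocks deployment automatically instead of relying on someone remembering to check.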

The main point here is that LLMs require layered security: safeguarding these models from the ground up, beginning with pre-training, advancing through fine-tuning, and extending to runtime operations. The key is establishing a robust framework with LLM(Sec)Ops and ML(Sec)Ops to ensure continuous, comprehensive protection.

Next time, we'll explore some challenges and advanced ideas, like hallucinations, Retrieval-Augmented Generation (RAG), and how agents can enhance AI. These concepts will help us see the bigger picture and understand how to manage the complexities of LLMs. Stay tuned—there’s a lot more to discover!

Until then, here’s some further reading…

NSA, CISA, and FBI: Best Practices for AI Deployment